This is a report detailing the design of Web Infrastructure's hosting platform as of 2021.

You'll find a succinct list of the technologies used here.

This gets dense.

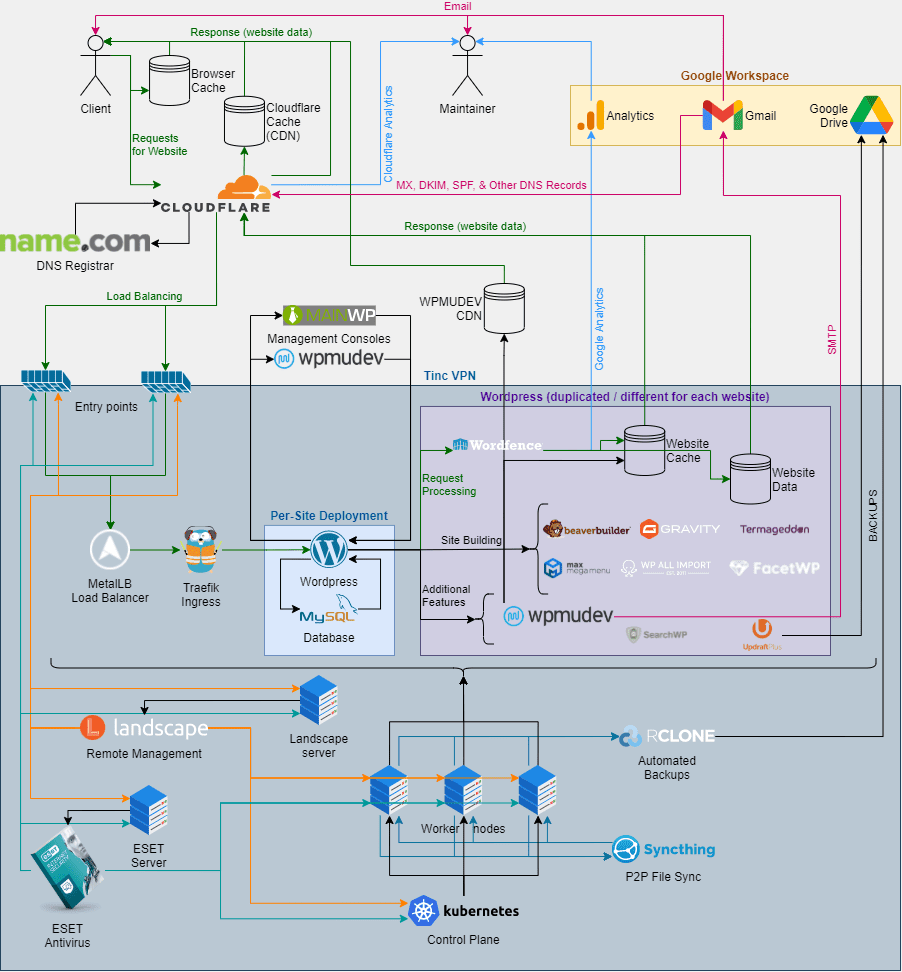

Diagram

Overview

Only two server tiers were used: production (prod) and development (dev). Development servers were usually in-house machines that were spun up and down as needed. The hardware of these servers varied dramatically, as did their network speeds.

Production servers were rented from reputable web-hosting providers, including InterServer, Liquid Web, and DreamHost. The roster of providers changed over time and, at one point, included Kualo and DigitalOcean. Servers with NVMe drives and larger RAM capacities were preferred. All providers were required to have data centers in the United States of America as well as access to Tier-1 network backbones with no more than 200ms round-trip time (RTT) from Reno, NV. This minimized website load times and ensured that data replication took place in a timely manner. Decreasing the latency between servers dramatically increased website performance and backup reliability.

Each server ran Ubuntu 20.04 LTS when possible (some management software, like Canonical’s Landscape, only ran on Ubuntu 16.04 LTS). Other operating systems, especially other Linux distributions, could have been used but were avoided in order to simplify the maintenance of all servers.

Servers communicated with each other over Tinc (https://www.tinc-vpn.org/) using 4096-bit RSA encryption. This created a Virtual Private Network (VPN), which allowed for a more restrictive, public-facing firewall alongside open communication (i.e. little to no firewalling) between servers. A layer 2 network (https://en.m.wikipedia.org/wiki/OSI_model) was used for server communication and a layer 3 network was used for administration. The admin network was only necessary to work around a limitation in the design of Android's VPN API, which prevented mobile users from connecting to layer 2 networks (mobile access was desired for emergency maintenance while traveling, etc.).
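As a hedged illustration of the layer 2 versus layer 3 split, the Python sketch below renders a minimal `tinc.conf` for a node. In tinc, `Mode = switch` gives an Ethernet-like (layer 2) network and `Mode = router` (the default) gives an IP-routed (layer 3) network. The helper, node names, and interface names are hypothetical; the report does not include its actual configuration. On tinc 1.0, a 4096-bit RSA key pair can be generated with `tincd -n <netname> -K4096`.

```python
def tinc_conf(name: str, mode: str, connect_to: list, interface: str) -> str:
    """Render a minimal tinc.conf (hypothetical helper, not from the report).

    mode="switch" -> layer 2 network (server traffic)
    mode="router" -> layer 3 network (admin network)
    """
    if mode not in ("switch", "router"):
        raise ValueError(f"unsupported tinc mode: {mode}")
    # tinc node names may only contain letters, digits, and underscores.
    for node in [name, *connect_to]:
        if not node.replace("_", "").isalnum():
            raise ValueError(f"invalid tinc node name: {node}")
    lines = [f"Name = {name}", f"Mode = {mode}", f"Interface = {interface}"]
    # One ConnectTo line per peer this node should dial on startup.
    lines += [f"ConnectTo = {peer}" for peer in connect_to]
    return "\n".join(lines) + "\n"


# Hypothetical example: a production node on the layer 2 server network.
print(tinc_conf("prod1", "switch", ["prod2", "entry1"], "tincl2"))
```

Each node also needs a `hosts/<name>` file containing its public key, copied to every peer it should talk to.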

The use of a VPN in this manner mirrored a typical on-premises deployment. For simplicity, applications running on servers within this VPN will be referred to as “on-prem” and services that were not exposed to the open internet will be said to be “only on-prem”.

Specialty servers were provisioned with more advanced firewall rules (e.g. using Config Server Firewall (CSF), https://configserver.com/cp/csf.html). These servers routed traffic to services within the VPN so that those services could be exposed to the open internet. These will be referred to as "entry-point" servers.

For antivirus, each server had ESET File Security (https://help.eset.com/efs/8/en-US/) installed and was connected to an instance of ESET PROTECT (https://help.eset.com/protect_install/81/en-US/), which ran only on-prem. Regular scans were staggered across servers by inserting a random delay before each server's scan started. Coupled with real-time protection, this helped ensure that any malware was caught and removed as soon as possible.
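The random-delay staggering can be sketched in Python. This only models the scheduling idea and is not how ESET PROTECT's scheduler is configured; the helper, the server names, and the 120-minute window are hypothetical.

```python
import random
from datetime import datetime, timedelta


def staggered_scan_schedule(servers, start, max_delay_minutes=120, seed=None):
    """Assign each server a scan start time with a random delay (hypothetical helper).

    Spreading the start times keeps every server from scanning at once.
    """
    rng = random.Random(seed)  # seedable so a schedule can be reproduced
    return {
        server: start + timedelta(minutes=rng.uniform(0, max_delay_minutes))
        for server in servers
    }


schedule = staggered_scan_schedule(["prod1", "prod2", "prod3"], datetime(2021, 6, 1, 2, 0), seed=42)
for server, when in sorted(schedule.items(), key=lambda kv: kv[1]):
    print(server, when.strftime("%H:%M"))
```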

All Ubuntu 20.04 servers were managed by Canonical’s Landscape (https://landscape.canonical.com/). Primarily, this helped to orchestrate server updates and provide information on the health of each server. Landscape was deployed only on-prem.

Files, including databases, were synced between servers using Syncthing (https://syncthing.net/), which ran only on-prem and synchronized files every 30 minutes. This free software enabled peer-to-peer (p2p) file replication between all servers and was used to back up websites in a way that made site migrations between servers almost trivial. The technology still had flaws, including file conflicts when servers had been offline for a long time or when sites were updated in the middle of a migration. Syncthing should have been replaced with a more appropriate file-synchronization technology; however, none existed at the time.
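Because conflicts were a known flaw, a periodic check for conflict copies is a natural companion. Syncthing keeps the losing side of a conflict as `<filename>.sync-conflict-<date>-<time>-<modifiedBy>.<ext>`, where `<modifiedBy>` is the short ID of the device that made the change. The Python sketch below, a hypothetical helper and not part of the original setup, lists such files under a synced folder so they can be reviewed before or after a migration.

```python
import re
from pathlib import Path

# Matches the date, time, and short device ID in a Syncthing conflict file name.
CONFLICT_RE = re.compile(r"\.sync-conflict-(\d{8})-(\d{6})-([A-Z0-9]{7})")


def find_conflicts(root):
    """Yield (path, date, time, device_id) for each conflict copy under root."""
    for path in sorted(Path(root).rglob("*.sync-conflict-*")):
        match = CONFLICT_RE.search(path.name)
        if match:
            yield (path, *match.groups())
```

Calling `list(find_conflicts("/srv/sites"))` on a (hypothetical) synced folder returns one tuple per conflict copy; an empty list means the folder is clean.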

Files were additionally backed up to Google Drive using Rclone (https://rclone.org/) every hour. Rclone was set up on each server, and Google Drive served as the repository for backups of database snapshots. Rclone and Google Drive were not used to transfer any data between on-prem servers. Snapshots were taken by a custom script run by cron (https://en.wikipedia.org/wiki/Cron).
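A hedged sketch of what such an hourly snapshot-and-upload job might look like. The report does not include its script, so the helper, database name, paths, and the `gdrive:` rclone remote are hypothetical. `mysqldump --single-transaction` takes a consistent dump of InnoDB tables without locking them, and `rclone copy` uploads new or changed files without deleting anything on the remote.

```python
import subprocess
from datetime import datetime, timezone


def backup_commands(database, snapshot_dir, remote="gdrive:backups", now=None):
    """Build the two commands for one snapshot-and-upload run (hypothetical helper)."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y%m%d-%H%M%S")
    snapshot = f"{snapshot_dir}/{database}-{stamp}.sql"
    # Consistent dump of InnoDB tables without holding table locks.
    dump = ["mysqldump", "--single-transaction", f"--result-file={snapshot}", database]
    # Upload new snapshots; `rclone copy` never deletes files on the remote.
    upload = ["rclone", "copy", snapshot_dir, f"{remote}/{database}"]
    return [dump, upload]


def run_backup(database, snapshot_dir):
    for cmd in backup_commands(database, snapshot_dir):
        subprocess.run(cmd, check=True)  # stop at the first failing step
```

Such a script could be scheduled hourly with a crontab entry like `0 * * * * /usr/bin/python3 /opt/backup/run_backup.py` (path hypothetical).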

Note: Dropbox offered both the synchronization capabilities of Syncthing and the cloud backup abilities of Google Drive and Rclone. However, Dropbox did not function within the VPN because of the restrictive, public-facing firewall and Dropbox’s inability to operate over a specified network interface.

Services (mostly websites) were managed through an only on-prem deployment of Kubernetes (K8s) (https://kubernetes.io/). Kubernetes required a “control plane”, which could consist of any number of servers (i.e. a multi-master configuration). Other servers were added to the K8s cluster as “worker nodes”, which did the actual web hosting. K8s worker nodes were labeled "dev" or "prod" to facilitate the scheduling of development and production services. This ensured that production sites were not placed on less reliable development servers and that development sites did not consume the more expensive production resources.
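Pinning a site to one tier can be expressed with a `nodeSelector` in the pod template. The Python sketch below edits a pod spec as a plain dictionary (the same structure that would be serialized to YAML). The helper and the label key `tier` are assumptions; the report only says nodes carried "dev" and "prod" labels. Nodes would be labeled with something like `kubectl label nodes <node> tier=prod`.

```python
import copy


def pin_to_tier(pod_spec: dict, tier: str) -> dict:
    """Return a copy of pod_spec that may only be scheduled on nodes labeled tier=<tier>.

    The label key "tier" is hypothetical.
    """
    if tier not in ("dev", "prod"):
        raise ValueError(f"unknown tier: {tier}")
    spec = copy.deepcopy(pod_spec)  # leave the caller's spec untouched
    spec.setdefault("nodeSelector", {})["tier"] = tier
    return spec


wordpress_pod = {"containers": [{"name": "wordpress", "image": "wordpress"}]}
print(pin_to_tier(wordpress_pod, "prod")["nodeSelector"])  # {'tier': 'prod'}
```

Because every deployment names exactly one tier, the same rule keeps production sites off dev nodes and development sites off prod nodes.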

Because data was replicated across servers through the methods mentioned above, it was simpler to use a single server as the K8s controller for the cluster. If the cluster had experienced a fatal error, suffered an attack, or been taken down for any other reason, existing functionality could easily have been restored by redeploying K8s with a patched configuration and/or on a different server. In the past, cluster misconfigurations (e.g. due to improperly applied updates) caused cluster-wide failures in "highly available" (aka "multi-master") deployments. This stopped being an issue once the data was separated from the services and a policy of a single, easily redeployed controller was instituted.

Each website existed as a containerized application (for more info, see resources like https://www.ibm.com/cloud/learn/containerization). Its deployment (https://kubernetes.io/docs/concepts/workloads/controllers/deployment/) consisted of two pods (https://kubernetes.io/docs/concepts/workloads/pods/): one for the MySQL database and one for WordPress. Each pod was given at minimum 0.25 vCPU and 0.25 GB RAM, for a per-website minimum of 0.5 vCPU and 0.5 GB RAM. Each pod could use up to 2 vCPU and 2 GB RAM, so in times of high demand a website could use up to 4 vCPU and 4 GB RAM. This proved sufficient for the loads encountered by all websites.
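The per-pod figures above map onto Kubernetes resource requests (the guaranteed minimum) and limits (the cap). A sketch in Python, assuming binary units so that 0.25 GB is written as `256Mi`; the helper names are hypothetical. Summing over the two pods reproduces the per-website totals.

```python
# Per-pod values from the text: request 0.25 vCPU / 0.25 GB, limit 2 vCPU / 2 GB.
POD_RESOURCES = {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "2", "memory": "2Gi"},
}


def cpu_millicores(quantity: str) -> int:
    """Convert a Kubernetes CPU quantity ("250m" or "2") to millicores."""
    return int(quantity[:-1]) if quantity.endswith("m") else int(float(quantity) * 1000)


def memory_mib(quantity: str) -> int:
    """Convert a memory quantity in Mi or Gi to MiB (other suffixes not handled)."""
    if quantity.endswith("Gi"):
        return int(quantity[:-2]) * 1024
    if quantity.endswith("Mi"):
        return int(quantity[:-2])
    raise ValueError(f"unsupported memory quantity: {quantity}")


def site_totals(pods: int = 2, resources: dict = POD_RESOURCES) -> dict:
    """Total CPU (millicores) and memory (MiB) for one website's pods."""
    return {
        kind: (pods * cpu_millicores(r["cpu"]), pods * memory_mib(r["memory"]))
        for kind, r in resources.items()
    }


print(site_totals())  # {'requests': (500, 512), 'limits': (4000, 4096)}
```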

Globally distributing an on-prem cluster necessitated that each website be served by only a single worker node at any given time. Otherwise, the high latency between site instances would cause conflicting updates and database integrity would eventually degrade. To further enhance site speed, each WordPress pod was always scheduled on the same server as the MySQL pod for its website. This eliminated the overhead of high RTTs between the two pods and dramatically increased site performance.
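Co-locating the two pods of a site is typically done with pod affinity keyed on the node hostname. The sketch below builds the `affinity` block for a WordPress pod as a Python dictionary; the helper and the labels `app=<site>` and `component=mysql` are hypothetical, since the report does not give its manifests.

```python
def colocate_with_mysql(site: str) -> dict:
    """Pod affinity requiring a WordPress pod to land on the node running its site's MySQL pod.

    The labels app=<site> and component=mysql are hypothetical.
    """
    return {
        "podAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {"app": site, "component": "mysql"}},
                    # Same hostname means same node.
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }


wordpress_pod_spec = {
    "containers": [{"name": "wordpress", "image": "wordpress"}],
    "affinity": colocate_with_mysql("site1"),
}
```

The single-node rule could be enforced alongside this with `replicas: 1` and `strategy: {type: Recreate}` on each Deployment, so the old pod is stopped before its replacement starts; the report does not say whether it was done this way.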

When a user visited a website, traffic was routed first through Cloudflare (https://www.cloudflare.com/), which provided DNS-level security, caching, and analytics. Cloudflare then sent the user's request to one of the aforementioned entry-point servers via load balancing, typically round-robin or location-aware (https://en.m.wikipedia.org/wiki/Load_balancing_(computing)). The entry-point server then forwarded the request to a layer 2 load balancer within the VPN, created by a MetalLB (https://metallb.universe.tf/) deployment on K8s. That load balancer was backed by the Traefik service (https://traefik.io/), also running on K8s, which handled cluster ingress. Once the request reached the appropriate web-hosting container, it was processed and responded to as a traditional web server would.
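The two steering policies mentioned can be illustrated in a few lines of Python. In practice this selection happened inside Cloudflare's load balancer, not in code written for the platform; the helpers, entry-point names, and RTT figures are hypothetical.

```python
from itertools import cycle


def round_robin(entry_points):
    """Return a picker that hands out entry points in strict rotation."""
    ring = cycle(entry_points)
    return lambda: next(ring)


def nearest(rtt_ms: dict) -> str:
    """Location-aware choice: the entry point with the lowest measured RTT."""
    return min(rtt_ms, key=rtt_ms.get)


pick = round_robin(["entry1", "entry2", "entry3"])
print([pick() for _ in range(4)])  # ['entry1', 'entry2', 'entry3', 'entry1']
print(nearest({"entry1": 80.0, "entry2": 25.0, "entry3": 140.0}))  # entry2
```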

As already mentioned, all websites were built with WordPress (https://wordpress.org/). This greatly simplified the development process and gave website maintainers a consistent user interface. All websites used the Beaver Builder Theme (https://www.wpbeaverbuilder.com/wordpress-framework-theme/).

All websites were managed through both the WPMUDEV Hub (https://wpmudev.com/hub-welcome/) and a MainWP instance (https://mainwp.com/), which was hosted by WPMUDEV rather than on the Web Infrastructure platform. Both services aided in automatically updating all websites and provided a means of applying almost arbitrary changes to any set of websites. These management consoles greatly reduced the workload of maintaining multiple websites.

Customer engagement was measured through both Google and Cloudflare Analytics. Website uptime was measured by both WPMUDEV and Uptime Robot (https://uptimerobot.com/). Accessibility feedback was provided by Google Lighthouse and SmartCrawl Pro, as well as by Siteimprove (https://siteimprove.com/) for the clients who were part of the University of Nevada, Reno. The information provided by these applications was used to decide which manual updates were required for each website and for the infrastructure as a whole.

Email for all domains was centrally managed through Google. A single “noreply” Google Workspace (https://workspace.google.com/) user account was shared among all websites. The noreply account had an alias noreply@DOMAIN for each website, where DOMAIN was the website's domain name. Each site used Branda Pro to authenticate to smtp.gmail.com over TLS with a username and app password. This allowed each website to send email from its own domain under the security and legal agreements in place with Google while keeping costs low. Additional inboxes for humans (e.g. billing@DOMAIN) were created as Google Groups.
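For illustration, the same pattern (authenticating to smtp.gmail.com over TLS with the shared account and an app password, then sending as the site's noreply alias) looks like this with Python's standard library. The sites themselves used Branda Pro, not this code; the helpers, account name, and domains are hypothetical. Gmail only keeps the alias in `From` if it has been added to the account as a send-as address; otherwise it rewrites the header to the account's primary address.

```python
import smtplib
from email.message import EmailMessage


def noreply_message(domain: str, to: str, subject: str, body: str) -> EmailMessage:
    """Build a message sent from the site's noreply@DOMAIN alias."""
    msg = EmailMessage()
    msg["From"] = f"noreply@{domain}"
    msg["To"] = to
    msg["Subject"] = subject
    msg.set_content(body)
    return msg


def send_via_gmail(msg: EmailMessage, username: str, app_password: str) -> None:
    """Send through Gmail's SMTP server on port 587, upgrading to TLS with STARTTLS."""
    with smtplib.SMTP("smtp.gmail.com", 587) as smtp:
        smtp.starttls()
        smtp.login(username, app_password)
        smtp.send_message(msg)
```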

All emails passed SPF (https://en.wikipedia.org/wiki/Sender_Policy_Framework), DKIM (https://en.wikipedia.org/wiki/DomainKeys_Identified_Mail), and DMARC (https://en.wikipedia.org/wiki/DMARC). The combined use of Cloudflare and Google made this easy. Custom Python scripts, leveraging Cloudflare’s Python API, were also written to remove the manual labor of updating DMARC policies and other DNS records for all domains.
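The records involved can be generated per domain. The Python sketch below returns SPF and DMARC TXT records as dictionaries shaped like the body of Cloudflare's v4 `POST /zones/{zone_id}/dns_records` endpoint (`type`, `name`, `content`). It does not call the API, and the helper, the `reject` default, and the reporting address are assumptions, not the report's actual policy. DKIM is omitted because its key is generated in the Google Admin console and, with the default selector, published at `google._domainkey.<domain>`.

```python
def mail_auth_records(domain: str, dmarc_policy: str = "reject", report_to: str = None) -> list:
    """SPF and DMARC TXT records for a domain whose mail is sent through Google (hypothetical helper)."""
    if dmarc_policy not in ("none", "quarantine", "reject"):
        raise ValueError(f"invalid DMARC policy: {dmarc_policy}")
    dmarc = f"v=DMARC1; p={dmarc_policy}"
    if report_to:
        dmarc += f"; rua=mailto:{report_to}"  # aggregate report destination
    return [
        # Authorize Google's outbound mail servers for this domain.
        {"type": "TXT", "name": domain, "content": "v=spf1 include:_spf.google.com ~all"},
        {"type": "TXT", "name": f"_dmarc.{domain}", "content": dmarc},
    ]
```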

Domain Name System

Domain names were registered through several registrars, including name.com (previously Dyn DNS), Google Domains, and Namecheap. Each domain used Cloudflare’s nameservers so that DNS records could be easily managed. DNSSEC (https://www.icann.org/resources/pages/dnssec-what-is-it-why-important-2019-03-05-en) was enabled through Cloudflare, using Algorithm 13 (ECDSA P-256 with SHA-256) DS records.
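A DS record's data has four fields: key tag, algorithm, digest type, and digest. Algorithm 13 is ECDSA P-256 with SHA-256, and Cloudflare publishes it with digest type 2 (SHA-256). The Python sketch below, a hypothetical helper, parses the fields so a registrar entry can be checked against what Cloudflare shows; the key tag and digest in the example are placeholders, not real values.

```python
# Subset of IANA DNSSEC algorithm and DS digest type numbers.
ALGORITHMS = {8: "RSASHA256", 13: "ECDSAP256SHA256", 15: "ED25519"}
DIGEST_TYPES = {1: "SHA-1", 2: "SHA-256", 4: "SHA-384"}


def parse_ds(rdata: str) -> dict:
    """Parse DS record data of the form '<key tag> <algorithm> <digest type> <digest>'."""
    key_tag, algorithm, digest_type, digest = rdata.split(maxsplit=3)
    return {
        "key_tag": int(key_tag),
        "algorithm": ALGORITHMS.get(int(algorithm), f"unknown ({algorithm})"),
        "digest_type": DIGEST_TYPES.get(int(digest_type), f"unknown ({digest_type})"),
        # Some tools split long digests with spaces; normalize to one uppercase string.
        "digest": digest.replace(" ", "").upper(),
    }


ds = parse_ds("2371 13 2 " + "ab" * 32)  # placeholder key tag and digest
print(ds["algorithm"], ds["digest_type"], len(ds["digest"]))  # ECDSAP256SHA256 SHA-256 64
```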

Challenges

Globally distributed servers had been coordinated for many years before this endeavor began. However, the rise in popularity of open source software and containerized applications finally made this project both affordable and stable for the common consumer.

The networking requirements for this endeavor were cumbersome, and infrastructure designs following this globally distributed scheme could reasonably be deployed only within a single continent. For example, if etcd (https://etcd.io/), the database backend for K8s, had not tolerated packet latencies in excess of 100ms, a multi-master deployment across the United States would not have been possible. Similarly, if any component of Kubernetes had required strong data consistency, global distribution would have failed outright, especially when taking encryption overhead into account. However, the platform proved stable within North America and should continue to become stronger as the open source ecosystem and humanity's interconnectedness grow.
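The latency sensitivity of etcd can be made concrete with its two timing flags, `--heartbeat-interval` and `--election-timeout` (defaults 100 ms and 1000 ms). etcd's tuning guide recommends a heartbeat interval around the round-trip time between members and an election timeout of at least ten times that RTT. The Python helper below, a hypothetical sketch, applies that rule of thumb without going below the defaults.

```python
def etcd_timing_flags(rtt_ms: float) -> list:
    """Suggest etcd timing flags for a given member-to-member RTT (rule-of-thumb sketch)."""
    # Heartbeat near the RTT, but never below etcd's 100 ms default.
    heartbeat = max(100, round(rtt_ms))
    # Election timeout of 10x the heartbeat (so at least 10x the RTT), never below 1000 ms.
    election = max(1000, 10 * heartbeat)
    return [f"--heartbeat-interval={heartbeat}", f"--election-timeout={election}"]


print(etcd_timing_flags(7))    # ['--heartbeat-interval=100', '--election-timeout=1000']
print(etcd_timing_flags(200))  # ['--heartbeat-interval=200', '--election-timeout=2000']
```

At the 7ms RTT of the original in-house setup the defaults suffice, while the 200ms ceiling required of hosting providers calls for timeouts twice the defaults.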

Data consistency and fidelity depended directly on network speed and reliability. This is why fast and stable hardware was necessary to make this approach work.

The WordPress plugins used required technical knowledge to set up and employ properly in concert. For example, WPMUDEV’s Defender Pro made denial-of-service attacks trivial when used with a cluster ingress controller. The requester’s IP was replaced by an internal IP (either the IP of the entry-point server or that of the K8s service), and Defender Pro banned that internal IP upon any of a number of triggers (e.g. a high 404 count), blocking every visitor whose traffic arrived through that address. In this particular case, Wordfence was used as the sole firewall plugin because it read the proper originating IP from the request and could thus block the appropriate traffic. Had Defender Pro not been packaged with the other WPMUDEV plugins, it would not have been purchased. However, because it was available and did not conflict with other functionality, Defender Pro’s malware scanning and security patches were used as an extra layer of defense.
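The fix amounts to a plugin trusting the proxies in front of it and reading the client address they forward. A minimal Python sketch of that logic, under stated assumptions: the helper and trusted ranges are hypothetical VPN subnets, and in a real deployment Cloudflare's published IP ranges would also need to be trusted (Cloudflare additionally sends the visitor's address in a `CF-Connecting-IP` header). This is not Wordfence's implementation.

```python
import ipaddress


def client_ip(remote_addr: str, x_forwarded_for: str, trusted: list) -> str:
    """Find the originating client IP behind a chain of trusted proxies.

    Walks X-Forwarded-For from right to left (nearest hop first) and returns the
    first address that is not inside a trusted proxy network.
    """
    networks = [ipaddress.ip_network(n) for n in trusted]
    hops = [h.strip() for h in x_forwarded_for.split(",") if h.strip()]
    hops.append(remote_addr)  # the peer that actually opened the connection
    for hop in reversed(hops):
        if not any(ipaddress.ip_address(hop) in net for net in networks):
            return hop
    return hops[0]  # every hop was trusted; fall back to the leftmost entry


# Hypothetical VPN ranges standing in for the entry-point and K8s service addresses.
TRUSTED = ["10.0.0.0/8", "172.16.0.0/12"]
print(client_ip("10.0.0.5", "203.0.113.7, 172.16.1.2", TRUSTED))  # 203.0.113.7
```

Banning the address this returns, rather than the connection's peer address, keeps one abusive visitor from locking out everyone behind the same proxy.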

Despite the numerous plugins and technological advances enjoyed by the clients of Web Infrastructure, many of the accessibility tweaks needed to meet WCAG 2.1 AAA requirements (mostly CSS styling) still had to be made by hand. In other words, accessible web development with WordPress was an evolving field with no prominent solution present in 2021.
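One of the checks behind those hand-made CSS tweaks, color contrast, is easy to compute. WCAG 2.1 defines contrast as (L1 + 0.05) / (L2 + 0.05) over the relative luminance of the lighter and darker colors, and Success Criterion 1.4.6 (AAA) requires at least 7:1 for normal text and 4.5:1 for large text. The Python sketch below implements that formula; the helper names are hypothetical.

```python
def _channel(c8: int) -> float:
    """Linearize one 8-bit sRGB channel as defined by WCAG 2.1."""
    c = c8 / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _channel(r) + 0.7152 * _channel(g) + 0.0722 * _channel(b)


def contrast_ratio(fg: str, bg: str) -> float:
    lighter, darker = sorted((relative_luminance(fg), relative_luminance(bg)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aaa(fg: str, bg: str, large_text: bool = False) -> bool:
    """WCAG 2.1 SC 1.4.6: 7:1 for normal text, 4.5:1 for large text."""
    return contrast_ratio(fg, bg) >= (4.5 if large_text else 7.0)


print(round(contrast_ratio("#767676", "#ffffff"), 2))  # 4.54
```

A mid-gray like `#767676` on white passes AAA only for large text, which is the kind of case that had to be fixed by hand in the theme's CSS.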

Costs

Costs were kept low by using in-house expertise as well as open-source technologies. Additionally, hardware was rented from reputable vendors at rates considerably below those of leading cloud providers. This reduction in expense came at the non-monetary cost of increased management overhead and slightly reduced reliability, costs that could be absorbed only through in-house expertise. Labor was the largest infrastructure-related expense. Other expenses included:

- Servers.

- WordPress plugins.

- Google accounts (for cloud storage and email management).

- DNS registration.

- CDN usage.

Security

The infrastructure was not assessed for vulnerabilities by any third party. However, it was developed with security in mind. It is also worth noting that no malware or intrusion was ever detected on any site. Most sites faced a consistent but low rate of attacks from random IPs, which the security measures in place handled with ease.

The safety of all servers relied on Tinc's encryption and on Tinc being free of exploitable vulnerabilities. If a vulnerability had been discovered in Tinc itself, the entire infrastructure could have been exposed to a suite of possible exploits. Such an increase in the size of the attack surface would not have guaranteed viable attack vectors to any other server on the VPN; it only increased the probability that one could be discovered.

The physical security of development servers was much more relaxed than that of production servers. If any dev server had been physically accessed or otherwise compromised, the attacker would have gained internal access to the VPN, just as if they had broken through Tinc. This vulnerability was mitigated by taking development servers offline when they were no longer needed. Additionally, a compromised node's Tinc public key could have been removed from the rest of the servers, which would have prevented that node from connecting to the VPN even if it still held the public keys of the servers it was trying to reach. This measure was never needed, as no nodes were ever suspected of being compromised.
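Revoking a node in tinc amounts to deleting its host file, `hosts/<node>` under the network's configuration directory (for example `/etc/tinc/<netname>/`), which holds that node's public key, from every other server and then having each tincd reread its configuration. According to tinc's documentation, sending tincd SIGHUP does this and closes connections to hosts whose host files were removed. A minimal Python sketch of the per-server step; the directory layout follows tinc's convention, but the helper and node names are hypothetical.

```python
from pathlib import Path


def revoke_node(tinc_dir: str, node: str) -> bool:
    """Remove a node's host file (and thus its public key) from this server's tinc config.

    Returns True if a file was deleted. Run on every remaining server, then send
    tincd SIGHUP so it rereads its configuration and host files.
    """
    # tinc node names are limited to letters, digits, and underscores,
    # which also rules out path tricks such as "../tinc.conf".
    if not node.replace("_", "").isalnum():
        raise ValueError(f"invalid tinc node name: {node}")
    host_file = Path(tinc_dir) / "hosts" / node
    if host_file.is_file():
        host_file.unlink()
        return True
    return False
```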

All production servers existed on the open internet and could have been targeted by denial-of-service attacks or direct attempts to break their firewalls. Because DNS ran through Cloudflare and traffic was then routed to a set of dedicated entry points, no party except the server admins should have known the number, location, or IPs of all servers involved. Combined with global data redundancy, this meant the failure of any single server should have had little to no effect on service availability. While all initial failure tests passed, a larger budget and redundant clusters would have been required to guarantee it.

Third-party code from a wide array of developers was introduced through each software component used. Very little of that code was written in-house or subject to in-house code review. If a backdoor had been introduced into any of these tools (e.g. the WordPress Docker image), it could have been leveraged to gain access to the infrastructure. It was thus necessary to trust in the good will of humanity and the code reviews performed by the open source community. The risk of this threat was minimized by using larger projects, such as Kubernetes and WordPress, in the hope that wider usage reduced the effectiveness of any malicious actors.

The use of automated updates further exacerbated the risk posed by third-party software. Ensuring that tools received the latest security patches and stayed protected against discovered threats left the infrastructure vulnerable to malicious code injected by software maintainers. The time and resources necessary to review all code used in this project far exceeded any reasonable budget, so such a review was forgone.

The use of multiple hosting providers had both positive and negative impacts on security. If a single hosting provider had been used, as would have been the case with a major cloud provider, the entire infrastructure would have been vulnerable to malicious actors within that company. By purchasing servers from multiple hosting providers, the probability of encountering a malicious actor increased while the damage that actor could do decreased. This tradeoff was treated the same way as the software used: servers were only rented from large, seemingly reputable companies.

All backups were stored on Google Drive. This meant that if a website was lost and Google’s data centers went offline, or a malicious actor at Google deleted the backups, the only way to restore the site would be from the synchronized files on each worker node. For this reason, file history was enabled in Syncthing so that each server could be used to restore any website's data to a previous state.
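Restoring from that history means picking the right archived copy. The Python sketch below assumes Syncthing's simple or staggered versioning, which moves replaced files into the folder's `.stversions` directory with a timestamp inserted before the extension (`name~YYYYMMDD-HHMMSS.ext`); the helper is hypothetical and only selects the newest copy older than a cutoff, leaving the actual restore to the operator.

```python
import re
from datetime import datetime
from pathlib import Path

# Assumed versioned-file naming: <stem>~<YYYYMMDD-HHMMSS><ext>
VERSION_RE = re.compile(r"^(?P<stem>.+)~(?P<stamp>\d{8}-\d{6})(?P<ext>\.[^.]*)?$")


def latest_version_before(versions_dir, filename, cutoff):
    """Return the newest archived copy of filename older than cutoff, or None."""
    target = Path(filename)
    best = None
    for p in Path(versions_dir).glob(f"{target.stem}~*"):
        m = VERSION_RE.match(p.name)
        # Skip files whose stem or extension does not match the requested file.
        if not m or m["stem"] != target.stem or (m["ext"] or "") != target.suffix:
            continue
        stamp = datetime.strptime(m["stamp"], "%Y%m%d-%H%M%S")
        if stamp < cutoff and (best is None or stamp > best[0]):
            best = (stamp, p)
    return best[1] if best else None
```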

Reliability and History

The infrastructure was first developed in-house using several consumer-grade desktop computers. Fast data links between these servers reduced RTT to around 7ms, which greatly increased the reliability of the software used, primarily Kubernetes and Syncthing. It also allowed for experimentation with other data replication technologies, like Ceph (https://ceph.io/en/). Unfortunately, Ceph and similar data-center-oriented technologies required strong consistency, which made them fail at RTTs upward of 40ms. Because geographic replication in Ceph (e.g. RBD Mirroring (https://docs.ceph.com/en/latest/rbd/rbd-mirroring)) and similar file systems was immature, these technologies could not be used to build this infrastructure. Using Syncthing and Kubernetes Host Path (https://kubernetes.io/docs/concepts/storage/volumes/#hostpath) adequately met the need for data replication, at the cost of reliability, as previously mentioned.

When the infrastructure was first being developed, the consumer-grade machines experienced failures that were likely due to being overused as servers (e.g. RAM going bad). This drove the need for reliable hardware which, along with the need for low-latency network connections, made renting servers the most viable option. Paying hosting providers for dedicated servers removed the need to pay for replacement hardware as it failed, and the redundancy in the cluster design made it possible to take advantage of competitive rates and discounts on a continuous basis (i.e. by decommissioning and provisioning servers more frequently). Thus, monetary costs were reduced by increasing the time spent on server management.

After initial development was complete and rented servers were provisioned, reliability issues persisted for a time. As mentioned in Challenges, conflicts between WordPress plugins and infrastructure networking caused frequent denials of service. Additionally, the multi-master deployment that worked on the LAN failed when servers went down over the WAN, which resulted in the cluster being redeployed with a single control node. Beyond that, some servers simply stopped responding, and it became necessary to work with the hosting providers’ support teams to address these problems.

Toward the end of 2021, the infrastructure appeared stable and grew in usage as other partners began hosting their own websites on it. This drove the overall price down while adding very little to the time required to manage all sites and allowed economies of scale to spur improvement.

Future

The needs identified in 2021, which should be met in 2022, include:

- Faster server deployment.

- Better file synchronization.

- Security audit.

In short, the infrastructure laid out here worked, was affordable, and is planned to be continually maintained and improved. As humanity builds faster network links and more efficient data encryption and replication systems, the geographic redundancy of systems like this will grow, providing faster and more reliable services to larger audiences.